We have created the world's most well-read parrot. GPT-4 can pass the bar exam, write a sonnet in the style of Shakespeare, or generate complex Python code. But ask it to plan a complex logistics route under changing conditions, and the magic vanishes.

The fundamental problem with modern Large Language Models (LLMs) lies in their architecture. The Transformer is, essentially, a next-token prediction machine: autocomplete on steroids.

"The model doesn't 'understand' that 2+2=4. It just knows that after the tokens '2+2=', the most probable next token is '4'."

This probabilistic nature makes them brilliant at creativity and intuition (System 1) but absolutely unreliable at reasoning (System 2). They hallucinate facts with the same confidence with which they state the truth because, for a neural network, the concept of "truth" does not exist: only probability.
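A deliberately tiny sketch makes the quote concrete. It is not how a Transformer works internally: a frequency table over characters stands in for the network, and the six-string corpus is invented for illustration. The "model" answers '4' to '2+2=' only because '4' most often followed that prefix in its data. Faced with a prompt it has never seen, it backs off to a shorter context and still answers, confidently and wrongly.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the "model" sees only text, never the rules of arithmetic.
CORPUS = ["2+2=4", "2+2=4", "2+2=4", "2+2=5", "3+3=6", "3+3=6"]

def train(corpus, max_context=4):
    """Count which character follows every context of length 1..max_context."""
    table = defaultdict(Counter)
    for text in corpus:
        for i in range(1, len(text)):
            for k in range(1, min(i, max_context) + 1):
                table[text[i - k:i]][text[i]] += 1
    return table

def predict(table, prompt, max_context=4):
    """Return the most frequent next character and its empirical probability,
    backing off to shorter contexts when the full prompt was never seen."""
    for k in range(min(len(prompt), max_context), 0, -1):
        counts = table.get(prompt[-k:])
        if counts:
            token, n = counts.most_common(1)[0]
            return token, n / sum(counts.values())
    return None, 0.0
```

With `table = train(CORPUS)`, `predict(table, "2+2=")` gives `("4", 0.75)`, while `predict(table, "7+7=")` falls back to the one-character context `"="` and gives `("4", 0.5)`: a fluent, probability-backed, false answer.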

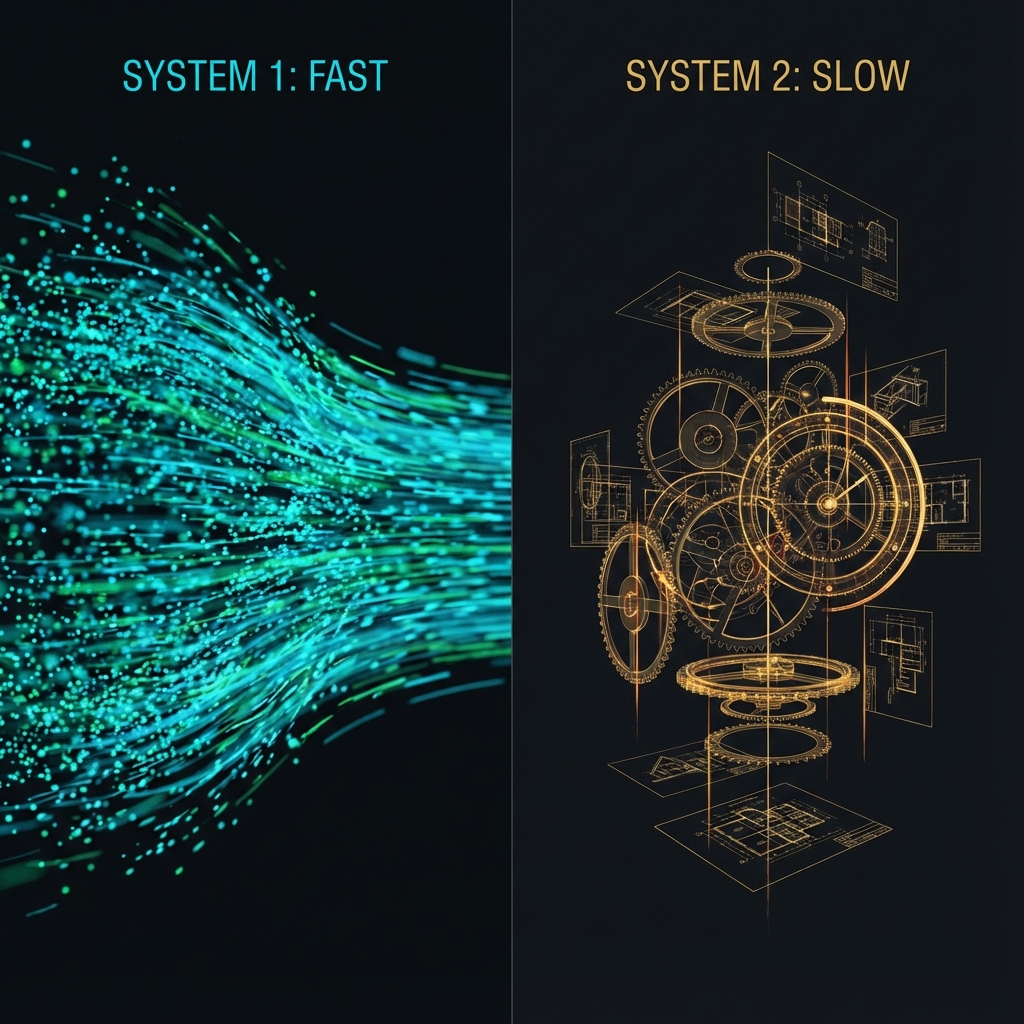

System 1 vs System 2

Nobel laureate Daniel Kahneman popularized a division of human thinking into two modes:

- System 1 (Fast): Intuitive, automatic, unconscious. "What is 2+2?", "Recognize a friend's face". Works instantly but is prone to error.

- System 2 (Slow): Logical, sequential, effortful. "Multiply 17 by 24", "Plan a budget". Requires time and concentration.

Fig 1. The Dichotomy of Thinking Processes

The Missing Link

Modern AI is pure System 1. It generates an answer in milliseconds without "thinking" before speaking. It has no internal monologue, no scratchpad, no ability to stop and verify its conclusions.

At AIFusion, we are building architectures that possess System 2. These are networks that can:

- 01. Delay the response in order to run a search for a solution.

- 02. Break a complex task into subtasks (decomposition).

- 03. Critically evaluate their own intermediate results (self-reflection).
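The three capabilities can be illustrated on the System 2 task "Multiply 17 by 24". This is a minimal sketch under stated assumptions, not AIFusion's architecture: every function name is hypothetical, the "intuitive" answer is simulated by rounding to the nearest ten, and checking a product by dividing it back is just one possible self-check. Decomposition splits the task by the digits of the second factor; self-reflection rejects any partial answer that fails the check; the delay is the loop itself, which refuses to return the first guess unverified.

```python
def decompose(a: int, b: int) -> list[tuple[int, int]]:
    """Split a*b into partial products by the decimal digits of b."""
    parts, place = [], 1
    while b > 0:
        digit = b % 10
        if digit:
            parts.append((a, digit * place))
        b //= 10
        place *= 10
    return parts

def fast_guess(a: int, b: int) -> int:
    """Simulated System 1: instant but approximate (rounds to the nearest ten)."""
    return round(a * b, -1)

def verify(a: int, b: int, product: int) -> bool:
    """Self-reflection: a correct product must divide back exactly into b."""
    if a == 0:
        return product == 0
    return product % a == 0 and product // a == b

def slow_compute(a: int, b: int) -> int:
    """Simulated System 2: repeated addition, slow but exact."""
    total = 0
    for _ in range(b):
        total += a
    return total

def solve(a: int, b: int) -> tuple[int, list[str]]:
    """Decompose, guess, check, and fall back to slow computation when a check fails."""
    total, trace = 0, []
    for x, y in decompose(a, b):
        guess = fast_guess(x, y)
        if not verify(x, y, guess):
            trace.append(f"rejected {x}*{y}={guess}")
            guess = slow_compute(x, y)
        trace.append(f"accepted {x}*{y}={guess}")
        total += guess
    return total, trace
```

`solve(17, 24)` returns `408` with the trace `["rejected 17*4=70", "accepted 17*4=68", "accepted 17*20=340"]`: the intuitive 70 is caught by the check and replaced before it can contaminate the final answer.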

Neuro-Symbolic Synthesis

Pure neural networks are bad at logic. Old symbolic AI (GOFAI) was good at logic but helpless in the real world. The future lies in their unification.

We integrate neural modules (for perception and intuition) with rigid symbolic solvers (for math and formal logic). Instead of hallucinating during calculations, the system can defer to a built-in "calculator" or knowledge base, exactly as a human does.
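A minimal sketch of that division of labor, assuming a regular expression can stand in for the neural module that spots a calculation in free text (a real system would use a learned parser; `neural_extract` and `answer` are hypothetical names). The symbolic side uses Python's standard `ast` module to parse the expression and `fractions.Fraction` to evaluate it exactly, so nothing is "predicted": 1/3 + 1/6 comes out as exactly 1/2.

```python
import ast
import operator
import re
from fractions import Fraction
from typing import Optional

# Symbolic side: an exact "calculator" that accepts only these operators.
OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def evaluate(node: ast.AST) -> Fraction:
    """Recursively evaluate a parsed integer expression with exact rational arithmetic."""
    if isinstance(node, ast.Expression):
        return evaluate(node.body)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        return Fraction(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in OPS:
        return OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in OPS:
        return OPS[type(node.op)](evaluate(node.operand))
    raise ValueError(f"unsupported expression: {ast.dump(node)}")

def neural_extract(question: str) -> Optional[str]:
    """Stand-in for the neural module (assumption): find an integer arithmetic span in text."""
    match = re.search(r"[(\d][\d\s+\-*/()]*", question)
    return match.group(0).strip() if match else None

def answer(question: str) -> str:
    """Route: the 'neural' part finds the calculation, the symbolic part computes it."""
    expr = neural_extract(question)
    if expr is None:
        return "no calculation needed"
    return str(evaluate(ast.parse(expr, mode="eval")))
```

`answer("What is 17 * 24?")` returns `"408"` and `answer("What is 1 / 3 + 1 / 6?")` returns `"1/2"`. Anything outside the whitelisted operators, such as `2 ** 3`, raises `ValueError` rather than producing a plausible guess, which is the point of routing calculations to a solver.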

Recursion and Thought Loops

A classic Transformer is a feed-forward network: input -> layers -> output, one pass per token. A cognitive architecture must be recursive: it must be able to feed information back through itself, refining and improving it on each cycle.
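The difference between one pass and a loop can be seen in miniature with a swapped-in example: Newton's method for a square root, not a neural network. The same fixed update (`refine`, a hypothetical name) is either applied once, feed-forward style, or fed its own output repeatedly until the answer stops changing. The sketch assumes a positive target.

```python
def refine(x: float, target: float) -> float:
    """One fixed 'pass': a single Newton update toward sqrt(target)."""
    return 0.5 * (x + target / x)

def single_pass(target: float) -> float:
    """Feed-forward style: one pass from a fixed starting guess, no feedback."""
    return refine(1.0, target)

def thought_loop(target: float, tol: float = 1e-12, max_steps: int = 100) -> tuple[float, int]:
    """Recursive style: feed each output back in until it stops changing."""
    if target <= 0:
        raise ValueError("this sketch assumes a positive target")
    x = 1.0
    for step in range(1, max_steps + 1):
        nxt = refine(x, target)
        if abs(nxt - x) < tol:
            return nxt, step  # converged: further cycles would not change the answer
        x = nxt
    return x, max_steps
```

For a target of 2, `single_pass` returns 1.5, while `thought_loop` converges to about 1.4142135623731 within a handful of cycles. The update rule is identical in both; only the feedback loop differs.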

We implement "Chain of Thought" mechanisms not as prompt engineering, but as a fundamental architectural primitive. The network generates hidden thoughts, evaluates them, discards dead-end branches, and only then forms the final response to the user.
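One known way to realize "generate, evaluate, discard, then answer" is a beam search over partial reasoning chains, in the spirit of tree-of-thought methods; it is swapped in here for illustration, not presented as AIFusion's mechanism. The toy puzzle (reach `target` from `start` using only "+3" and "*2"), the beam width, and the distance-to-target score are all hypothetical. The intermediate thoughts stay hidden inside the function; only a plan that actually reaches the target is returned.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy task: turn `start` into `target` using only these moves.
MOVES: Dict[str, Callable[[int], int]] = {
    "+3": lambda n: n + 3,
    "*2": lambda n: n * 2,
}

def think(start: int, target: int, beam_width: int = 3, max_depth: int = 10) -> Optional[List[str]]:
    """Beam search over hidden 'thoughts' (partial plans). Only the final plan is surfaced."""
    if start < 1:
        raise ValueError("this sketch assumes start >= 1, so every move increases the value")
    if start == target:
        return []
    beam: List[Tuple[int, List[str]]] = [(start, [])]
    for _ in range(max_depth):
        candidates: List[Tuple[int, List[str]]] = []
        for value, plan in beam:
            for name, move in MOVES.items():
                nxt = move(value)
                if nxt == target:
                    return plan + [name]  # a thought that checks out becomes the answer
                if nxt < target:  # every move increases the value, so overshooting is a dead end
                    candidates.append((nxt, plan + [name]))
        if not candidates:
            return None  # every branch was discarded
        # Evaluate the surviving thoughts: keep the closest to the target, drop the rest.
        candidates.sort(key=lambda c: target - c[0])
        beam = candidates[:beam_width]
    return None
```

`think(1, 11)` returns `["+3", "*2", "+3"]` (1 -> 4 -> 8 -> 11), and `think(4, 5)` returns `None` because every branch overshoots. A narrow beam is cheap but can discard a branch that would have led to a solution; widening it spends more hidden computation before answering.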

Foundational Research

Theoretical basis for our cognitive architectures.

General Theory of Stupidity

Analysis of cognitive biases in AI systems: why "smart" models make idiotic decisions and how to implement architectural "rationality".

Read Paper

Recursive Reasoning Nets

Upcoming publication on the implementation of recursive thought loops in multimodal agents.

Coming Soon